When doing product or pricing optimization work, a classic research challenge is choosing between conjoint and MaxDiff analysis.

Both are excellent market research methods for letting you understand ideal feature sets or pricing to maximize purchase interest. However, they yield very different results. By looking at the difference in how each method is set up, we can isolate the best one to answer a particular business need.

Comparing Conjoint vs MaxDiff Against Each Other

Both conjoint and maxdiff studies isolate the preferences for certain features or benefits. However, when we look at how we go about setting up each study, the inputs used with the methods, and how respondents engage with each one, we see how they stand apart.

What A Conjoint Analysis vs. A MaxDiff Analysis Does

A MaxDiff is the simpler of the two approaches. It compares high-level features, messages, or other variables against each other. For instance, let’s take a CPG company that wants to optimize soda purchase intent. With a MaxDiff, this means asking about the relative importance of soda-related features, such as: comes in a 20 oz bottle, is sugar free, or has less carbonation.

In contrast, a conjoint analysis is more complex. It tests both the relative importance of key product features and the desired levels within those features. Let’s use the soda example again. In this case, we still test the relative importance of price, size, and flavor in a purchase decision. At the same time, we can look at the appeal of different levels within these features, for instance a price point of $1.99 vs. $1.59 vs. $0.99.

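| | MaxDiff | Conjoint |
|---|---|---|
| WHAT IT DOES | Measures the relative importance of high-level features, messages, or other variables | Measures the relative importance of key product features and the appeal of the levels within each feature |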

When Is It Ideal To Use A Conjoint vs A MaxDiff

MaxDiff studies assess the relative importance of features or messaging. They do not let you test whether any given feature is truly unappealing or whether it harms purchase decisions. This makes MaxDiff great for relatively simple products with limited components.

Meanwhile, a Conjoint study’s complexity allows it to answer additional sets of questions. These include identifying ideal bundling of features and levels as well as negative purchase intent triggers.

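| | MaxDiff | Conjoint |
|---|---|---|
| IDEAL TO USE WHEN… | You need the relative importance of features or messaging for a relatively simple product with limited components | You need to identify the ideal bundling of features and levels, or to find negative purchase intent triggers |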

How The Input Criteria Differ Across The Two Options

Both of these approaches are essentially trade-off studies. This means respondents compare different features or levels against each other and select their preferred option. As a result, each study must offer enough features or levels to allow for real trade-offs.

When fielding a MaxDiff, aim for 12-20 features. In contrast, a conjoint study works best with 3-8 unique features and 2-7 levels for each feature.
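
One way to see why a conjoint needs bounded inputs is to count how many distinct product bundles a set of features and levels can produce. The sketch below is illustrative only: the soda attributes and level lists are hypothetical, and `full_factorial_size` is a helper name invented for this example.

```python
from math import prod

# Hypothetical soda attributes; the levels are illustrative, not from a real study.
attributes = {
    "price": ["$0.99", "$1.59", "$1.99"],
    "size": ["12 oz", "20 oz", "2 L"],
    "flavor": ["cola", "lemon-lime", "orange", "root beer"],
}

def full_factorial_size(attrs):
    """Count the distinct bundles if every level is crossed with every other."""
    return prod(len(levels) for levels in attrs.values())

n_profiles = full_factorial_size(attributes)
print(n_profiles)  # 3 * 3 * 4 = 36 possible bundles

# At the top of the suggested range (8 features with 7 levels each),
# the count grows to 7 ** 8 = 5,764,801 possible bundles.
largest = full_factorial_size({f"feature_{i}": list(range(7)) for i in range(8)})
print(largest)
```

Because the count multiplies with every added feature or level, each respondent only ever sees a small sample of the possible bundles.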

| | MaxDiff | Conjoint |
|---|---|---|
| INPUT CRITERIA | 12-20 features | 3-8 unique features, with 2-7 levels for each feature |

Reviewing Differences In The MaxDiff vs Conjoint Respondent Experience

The respondent experience varies greatly depending on which type of study they take.

MaxDiff Style Questions

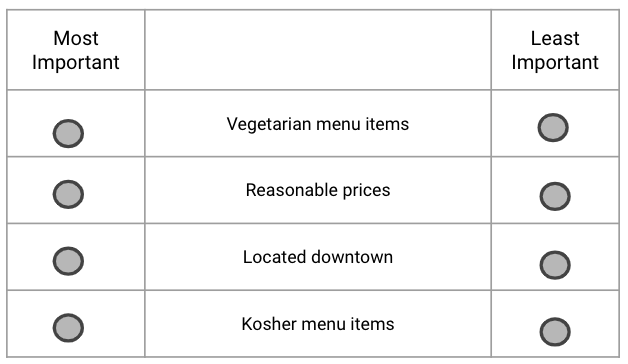

MaxDiff survey questions ask individuals to pick the most important and least important factors when making a decision.

Let’s take a look at the prompt below. The question reads, “Based on the list of features below, which of the features is the most important and least important when selecting a restaurant?” Respondents see a table that lets them evaluate different features. They then select the most and least important of just those features on the screen.

Once the respondent selects their choices, the table refreshes. The respondent may see completely new features or a mix of new and old features. They once again pick the most and least important features in their decision process. This process repeats many times for each participant.
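
The refresh-and-repeat flow described above can be sketched as a simple screen generator. This is a minimal sketch, not how any particular survey platform builds its design: the restaurant features, the screen size of four, and the function name `build_maxdiff_screens` are all assumptions made for illustration.

```python
import random

# Hypothetical restaurant features (12, the low end of the suggested range).
features = [
    "short wait", "free parking", "outdoor seating", "vegetarian menu",
    "kid friendly", "takes reservations", "open late", "full bar",
    "live music", "locally sourced", "delivery", "loyalty program",
]

def build_maxdiff_screens(items, per_screen=4, rounds=3, seed=0):
    """Return screens of items; each item appears exactly once per round."""
    if len(items) % per_screen != 0:
        raise ValueError("this sketch needs len(items) divisible by per_screen")
    rng = random.Random(seed)
    screens = []
    for _ in range(rounds):
        order = items[:]          # copy so the caller's list is untouched
        rng.shuffle(order)        # new random order each round
        for i in range(0, len(order), per_screen):
            screens.append(order[i:i + per_screen])
    return screens

screens = build_maxdiff_screens(features)
for number, screen in enumerate(screens, start=1):
    print(number, screen)
```

With these settings a respondent answers nine screens and sees every feature three times, sometimes alongside features they have already rated and sometimes alongside new ones.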

Conjoint Style Questions

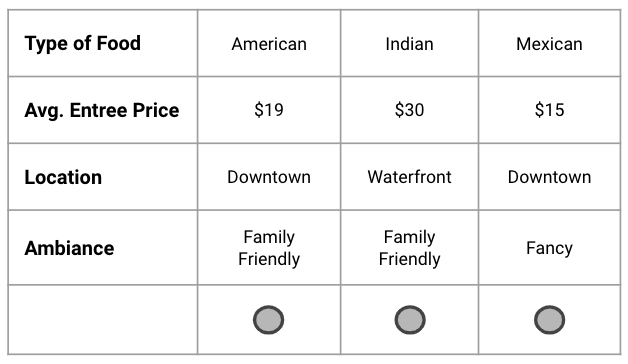

Conjoint questions ask respondents to select their preferred product based on the features it bundles together.

Let’s take a look at the conjoint prompt below. The question reads, “Given the restaurants you see below, which would you be most likely to visit?” The respondents see options that mix and match different features. They then must select the bundle they most prefer.

Once selected, the table refreshes. Respondents then see a new set of bundles and once again select their favorite. This bundle re-shuffling repeats many times for each participant.
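
The bundle re-shuffling can be sketched in a few lines. This is a rough illustration that draws bundles at random; real studies typically use a more carefully balanced design. The restaurant attributes, their levels, and the name `build_choice_tasks` are hypothetical.

```python
import random

# Hypothetical restaurant attributes and levels, for illustration only.
attributes = {
    "cuisine": ["Italian", "Mexican", "Thai"],
    "entree_price": ["$10", "$20", "$25"],
    "distance": ["5 min", "15 min", "30 min"],
}

def build_choice_tasks(attrs, n_tasks=8, per_task=3, seed=0):
    """Return n_tasks screens, each holding per_task distinct random bundles."""
    rng = random.Random(seed)
    tasks = []
    for _ in range(n_tasks):
        bundles = []
        while len(bundles) < per_task:
            # A bundle picks exactly one level for every attribute.
            bundle = {name: rng.choice(levels) for name, levels in attrs.items()}
            if bundle not in bundles:  # avoid showing the same bundle twice
                bundles.append(bundle)
        tasks.append(bundles)
    return tasks

tasks = build_choice_tasks(attributes)
for bundle in tasks[0]:
    print(bundle)
```

Each task is one screen: the respondent picks the bundle they prefer, and the recorded choices become the input for estimating utilities.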

How MaxDiff vs Conjoint Outputs Differ

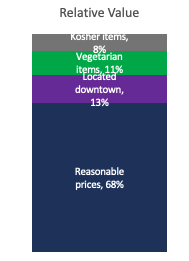

Both types of studies produce relative utility measures for each feature. That is, you’ll be able to visualize how much any given feature impacts a purchase decision. With MaxDiff, you see each feature or benefit’s overall impact on a purchase decision.
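
As a rough illustration of where a MaxDiff score can come from, the sketch below uses simple best-minus-worst counting. This is one basic approach and an assumption on our part; many tools estimate utilities with a statistical model instead. The response records and the name `best_worst_scores` are hypothetical.

```python
from collections import Counter

def best_worst_scores(responses):
    """Score each feature as (times best - times worst) / times shown."""
    shown, best, worst = Counter(), Counter(), Counter()
    for response in responses:
        shown.update(response["shown"])
        best[response["best"]] += 1
        worst[response["worst"]] += 1
    return {f: (best[f] - worst[f]) / shown[f] for f in shown}

# Hypothetical answers from three MaxDiff screens.
responses = [
    {"shown": ["price", "parking", "menu", "wait"], "best": "price", "worst": "parking"},
    {"shown": ["price", "menu", "hours", "wait"], "best": "menu", "worst": "hours"},
    {"shown": ["parking", "hours", "menu", "wait"], "best": "menu", "worst": "parking"},
]

scores = best_worst_scores(responses)
for feature, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{feature:8s} {score:+.2f}")
```

Scores fall between -1 (always picked as least important) and +1 (always picked as most important), which gives the ranked view of features that a MaxDiff chart shows.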

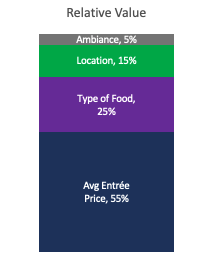

In the case of the Conjoint, you’ll start by seeing how much each feature impacts the purchase decision.


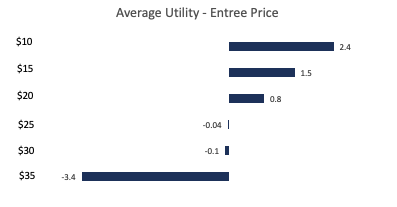

However, the conjoint also offers far more granular information. For starters, it produces the average utility of each feature’s levels. This means you can see the impact each level within a given feature has on purchase intent or alienation.

In the example below, we see that a $10 entree has a positive impact on purchase. Customers also have positive interest in entrees priced at $20, but the interest isn’t as strong. However, once the entree price reaches $25, purchase alienation sets in.

Additionally, you can isolate what the ideal product bundle looks like. That is, the set of levels within each feature that most increases the chance of a customer making a purchase.
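
To make these outputs concrete, the sketch below derives feature importance and an ideal bundle from a table of level utilities. All utility values are invented for illustration (the price levels simply mirror the positive, weaker, and negative pattern in the entree example), and the sketch assumes utilities add up across features with no interactions. The names `feature_importance` and `ideal_bundle` are hypothetical.

```python
# Hypothetical average utilities per level; the numbers are invented.
part_worths = {
    "entree_price": {"$10": 0.8, "$20": 0.3, "$25": -1.1},
    "cuisine": {"Italian": 0.4, "Mexican": 0.1, "Thai": -0.5},
    "distance": {"5 min": 0.6, "15 min": 0.0, "30 min": -0.6},
}

def feature_importance(utilities):
    """Share of the total utility range that each feature accounts for."""
    ranges = {f: max(lv.values()) - min(lv.values()) for f, lv in utilities.items()}
    total = sum(ranges.values())
    return {f: r / total for f, r in ranges.items()}

def ideal_bundle(utilities):
    """Pick the highest-utility level within each feature."""
    return {f: max(lv, key=lv.get) for f, lv in utilities.items()}

importance = feature_importance(part_worths)
bundle = ideal_bundle(part_worths)

for feature, share in importance.items():
    print(f"{feature:13s} {share:.1%}")
print(bundle)  # {'entree_price': '$10', 'cuisine': 'Italian', 'distance': '5 min'}
```

In this made-up data, price carries the widest utility range, so it dominates the decision, and the negative utility at $25 is the kind of alienation signal a MaxDiff alone would not surface.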

Determining If You’re Ready For A Conjoint or MaxDiff Study

Either of these studies requires one critical set of inputs: the discrete set of features (or levels) you want to evaluate. As a result, you’re only ready for either type of study when you know the features and levels that make sense to test.

Usually, that means you have already run product concept tests: fairly high-level assessments that give a general sense of a product’s appeal (or lack thereof). If there is general interest, then further testing and optimization make sense. However, if you haven’t done this step first, you’re likely not ready to do either a Conjoint or MaxDiff. You’ll end up working on product refinement without first gauging general product interest.