Positioning research has always focused on one question: What resonates with human buyers? You test message clarity, measure differentiation, and gauge emotional appeal. The goal is finding language that converts prospects into customers.

But there’s a new variable in the equation. AI systems now sit between your positioning and your prospects, summarizing your value proposition, comparing you to competitors, and recommending solutions.

If your messaging doesn’t work for these systems, you’re getting filtered out before human buyers even see you.

Traditional positioning research doesn’t account for this. It tells you what humans prefer, but it can’t tell you whether AI systems will surface your brand or skip over you entirely. To avoid being invisible in AI-mediated discovery, you need a research approach that tests for both audiences simultaneously.

Why Traditional Positioning Research Falls Short

Classic positioning research tells you what resonates with human buyers. It’s critical, but no longer sufficient.

Here’s what’s missing: any consideration of how AI systems will interpret, summarize, and repeat your positioning.

Think about how traditional positioning tests work. You ask buyers which messages are most compelling, or perhaps test taglines for memorability. You may also measure whether your brand sounds different from competitors. The assumption is that once you nail human appeal, your job is done.

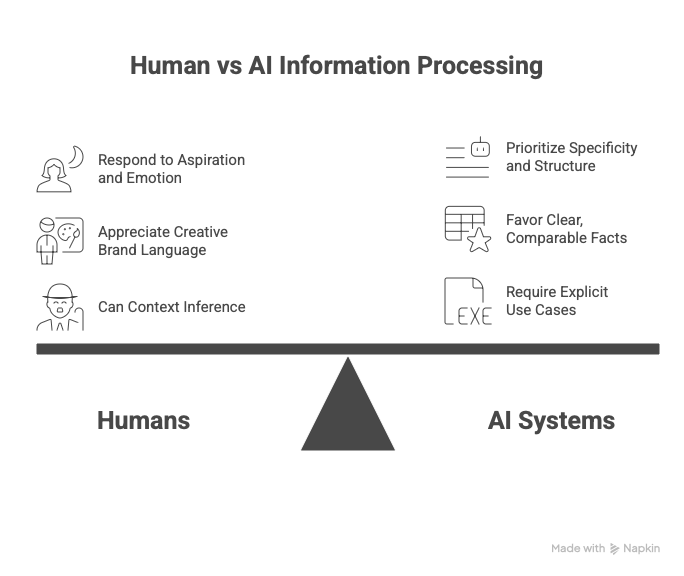

However, that’s only half the game now. AI systems consume information differently than humans:

- Humans respond to aspiration and emotion. AI systems prioritize specificity and structure.

- Humans appreciate creative brand language. AI systems favor clear, comparable facts.

- Humans can infer context. AI systems need explicit use cases and audience descriptors.

The consequence? Even well-positioned brands are getting filtered out of AI-generated recommendations.

Let’s look at two positioning statements, one human-optimized and one AI-optimized:

- Traditional positioning (human-optimized): “Empowering finance teams with industry-leading innovation and intuitive workflows.”

- AI-aware positioning: “Reduces month-end close from 10 days to 3 days for finance teams at 100-500 person companies using NetSuite or QuickBooks.”

Both might score well in a traditional message test. Humans might even prefer the first one for its aspirational feel. But guess which one is far more likely to get picked up when a CFO asks ChatGPT: “What tools help mid-sized companies close their books faster?” The second one.

Why? Because it contains the elements AI systems look for: a specific outcome (10 days to 3 days), a clear audience (finance teams at 100-500 person companies), and relevant context (NetSuite or QuickBooks users).

Traditional positioning research doesn’t test for any of this. And that’s a problem, because an increasing percentage of buyer journeys now start with an AI prompt, not a Google search.

The AI-Aware Positioning Framework

Testing positioning for both human buyers and AI systems requires a phased approach that extends traditional research methods. Here’s how to structure it.

Phase 1: Positioning Hypothesis Development

Start with your current positioning and key messages. For each core message, create two distinct variants that you’ll test in parallel.

The first variant should be human-optimized. This version leads with emotional hooks, aspirational language, and your brand voice. It’s designed to resonate, inspire, and differentiate in the ways traditional positioning is meant to do.

The second variant should be AI-optimized. This version emphasizes specific outcomes, quantifiable benefits, clear use cases, and explicit target audience descriptors. It’s built to be parsed, compared, and surfaced by AI systems.

Don’t assume you need to choose between them. In reality, you’ll likely need both variants deployed in different contexts across your marketing. The research will tell you where each performs best.

Phase 2: Dual-Context Testing (Qualitative)

This is where most positioning research stops short. You need to test in two distinct contexts to understand how your messages perform.

Part A: Human Response Testing

Conduct in-depth interviews with 8-12 buyers from your target segments. Run through your standard message testing protocol: clarity, differentiation, relevance, purchase intent. But add one critical question at the end: “If you were explaining what we do to a colleague, how would you describe it?”

This question reveals which language actually sticks and gets reused. The phrasing that humans naturally repeat when explaining your solution is often the same language that AI systems will pick up and echo in their responses. It’s a leading indicator of machine-friendly messaging.

Part B: AI Simulation Testing

This is new territory for most researchers, but it’s straightforward. Run the AI search queries your prospects would actually use, then compare the answers against your positioning variants. Try queries like, “What solutions help [job title] achieve [outcome]?” or “Compare tools for [use case] in [industry].”

The goal isn’t to see if your brand appears. It likely won’t yet. Instead, you’re validating your positioning hypotheses against what AI systems actually surface. Document which brands do appear and how they’re described. What language patterns show up repeatedly? What specific outcomes, use cases, or audience descriptors are emphasized? Which competitive differentiators get highlighted?

This tells you whether your positioning direction aligns with how AI systems are categorizing and presenting solutions in your space. If your AI-optimized positioning emphasizes elements that never appear in AI responses, that’s a red flag. If it mirrors the patterns AI systems are already using, you’re on the right track.
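
One way to keep this step repeatable is to generate the queries from templates and tally which phrases recur in the answers you record. The sketch below is only an illustration: the two templates come from the examples above, while the slot values, the sample responses, and the helper names `build_queries` and `tally_patterns` are made up for the example. It does not call any AI service. It assumes you paste in responses you collected by hand.

```python
from collections import Counter
from itertools import product
import re

# Query templates adapted from the examples above.
TEMPLATES = [
    "What solutions help {job_title} achieve {outcome}?",
    "Compare tools for {use_case} in {industry}.",
]

def build_queries(templates, slots):
    """Expand each template with every combination of the slot values it uses."""
    queries = []
    for template in templates:
        names = re.findall(r"\{(\w+)\}", template)
        for combo in product(*(slots[name] for name in names)):
            queries.append(template.format(**dict(zip(names, combo))))
    return queries

def tally_patterns(responses, patterns):
    """Count how many recorded responses mention each pattern (case-insensitive)."""
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for pattern in patterns:
            if pattern.lower() in lowered:
                counts[pattern] += 1
    return counts

# Hypothetical slot values for a finance-software category.
slots = {
    "job_title": ["CFOs", "controllers"],
    "outcome": ["a faster month-end close"],
    "use_case": ["month-end close"],
    "industry": ["SaaS"],
}
for query in build_queries(TEMPLATES, slots):
    print(query)

# Placeholder responses standing in for answers you recorded manually.
recorded = [
    "Vendor A shortens month-end close for mid-sized finance teams.",
    "Vendor B integrates with NetSuite to speed up month-end close.",
]
print(tally_patterns(recorded, ["month-end close", "NetSuite", "HITRUST"]))
```

A phrase from your AI-optimized variant that never shows up in the tally is the red flag described above. Phrases that recur across responses show the patterns AI systems already use in your category.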

Phase 3: Quantitative Validation

Once you’ve refined your positioning through qualitative testing, it’s time to validate at scale. Survey 200-400 respondents in your target segments, presenting your positioning variants in a structured message test.

You’ll measure traditional metrics like purchase intent, preference, and differentiation. But layer in AI-relevant dimensions as well:

- Ask respondents to rate statements like “This describes exactly who should use this solution” to measure specificity.

- Test “I could easily explain what problem this solves” to gauge re-statability.

- Measure “The benefits are concrete and measurable” to assess quantifiability.

These additional metrics tell you whether your positioning has the structural elements that both humans and AI systems need to understand, remember, and repeat your value proposition accurately.
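
To compare variants on these added dimensions, you could average the agreement ratings per variant. The following is a minimal sketch under stated assumptions: a 1-5 agreement scale, one row per respondent per variant, and the field names shown. None of these are prescribed by the framework, and `summarize` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import mean

# Dimension labels follow the three statements above; the keys are assumptions.
DIMENSIONS = ("specificity", "restatability", "quantifiability")

def summarize(ratings):
    """Return the mean rating for each dimension, grouped by variant.

    `ratings` is a list of dicts such as
    {"variant": "ai_optimized", "specificity": 4,
     "restatability": 5, "quantifiability": 4}.
    """
    by_variant = defaultdict(lambda: defaultdict(list))
    for row in ratings:
        for dim in DIMENSIONS:
            by_variant[row["variant"]][dim].append(row[dim])
    return {
        variant: {dim: round(mean(scores), 2) for dim, scores in dims.items()}
        for variant, dims in by_variant.items()
    }
```

With 200-400 respondents you would also want a significance test before acting on small gaps between variants. This sketch only produces the per-variant averages.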

The Positioning Language Elements That Work for Both

The good news is that many positioning elements appeal to both human buyers and AI systems. The key is understanding which specific language patterns serve both audiences effectively.

1. Specific use cases with clear job titles and industries

Instead of “For growing businesses,” try “For CFOs at 50-500 person SaaS companies.”

Why it works: Humans immediately know if they fit the profile, which improves relevance and connection. AI systems use these descriptors to categorize your solution and match it to relevant queries. When someone asks “What tools help SaaS CFOs?” you’re in the consideration set.

2. Quantifiable outcomes

Instead of “Improves efficiency,” try “Reduces month-end close from 10 days to 3 days.”

Why it works: Humans want proof that your solution delivers real results. Specific numbers make claims credible and memorable. AI systems can compare these quantified claims across vendors, and they’re more likely to surface solutions with concrete metrics than those with vague promises.

3. Problem-solution pairing with context

Instead of “Streamlined workflows,” try “When sales ops teams manage Salesforce data for 50+ reps, our tool eliminates duplicate entries and auto-enriches contact records.”

Why it works: This structure tells humans exactly what pain point you solve and whether it matches their situation. For AI systems, the problem-solution-context format provides the full picture needed to accurately recommend your solution when prospects describe similar challenges.

4. Comparative differentiators (not generic claims)

Instead of “Better security features,” try “Only platform with SOC 2 Type II + HITRUST certification for healthcare data.”

Why it works: Humans appreciate unique capabilities they can verify, especially in competitive categories. AI systems treat factual differentiators as reliable data points that can be validated and compared, making them more likely to cite these specific attributes.

Red flags to avoid: Watch out for positioning elements that sound good to marketing teams but fall flat with both buyers and AI systems. These include:

- Superlatives like “best,” “leading,” or “innovative” mean nothing without supporting evidence.

- Undefined jargon that requires insider knowledge creates confusion rather than clarity.

- Emotional-only positioning that lacks any functional grounding leaves both humans and AI systems unable to understand what you actually do.

- Vague audience descriptions like “forward-thinking enterprises” don’t help anyone determine if your solution is right for them.
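
A rough first pass over draft statements can be automated before any research spend. The sketch below is a heuristic, not a validated scoring model: the word lists are seeded from the examples in this article and are assumptions you would extend for your own category, and `lint_positioning` is a hypothetical helper name.

```python
import re

# Illustrative word lists seeded from this article's examples (assumptions).
SUPERLATIVES = {"best", "leading", "innovative", "industry-leading"}
VAGUE_AUDIENCES = ("growing businesses", "forward-thinking enterprises")

def lint_positioning(statement):
    """Return a list of heuristic warnings for a positioning statement."""
    text = statement.lower()
    # Tokenize into lowercase words, keeping hyphenated terms together.
    words = set(re.findall(r"[a-z][a-z\-]*", text))
    warnings = []
    found = sorted(SUPERLATIVES & words)
    if found:
        warnings.append("unsupported superlative: " + ", ".join(found))
    if not re.search(r"\d", text):
        warnings.append("no quantified outcome or audience size")
    for phrase in VAGUE_AUDIENCES:
        if phrase in text:
            warnings.append("vague audience: " + phrase)
    return warnings

print(lint_positioning(
    "Empowering finance teams with industry-leading innovation "
    "and intuitive workflows."
))
print(lint_positioning(
    "Reduces month-end close from 10 days to 3 days for finance teams "
    "at 100-500 person companies using NetSuite or QuickBooks."
))
```

A check like this cannot judge undefined jargon or emotional-only positioning. Those still need a human reviewer and the buyer interviews from Phase 2.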

Bringing The Research To Life

Once you’ve tested and validated that your positioning supports AI search visibility, implementation requires treating different contexts differently.

Contexts like website metadata, product descriptions, and case study abstracts need the language that helps systems categorize and recommend your solution. This is where initial discovery fueled by AI happens, so make these materials AI-ready.

Meanwhile, contexts like campaign creative, sales presentations, and brand storytelling can lean into aspiration and emotion. This is where consideration happens, so the focus shifts to persuading humans.

Hybrid contexts like homepages and product pages need both. Lead with the specific proof points that work for AI, then layer in the emotional hooks that move humans to action.

The goal isn’t choosing between human appeal and AI optimization. It’s understanding which version of your positioning belongs in which context, and having the research data to back up those decisions. Without testing for both audiences, you’re guessing. And in a world where AI increasingly shapes consideration sets, that’s a costly gamble.